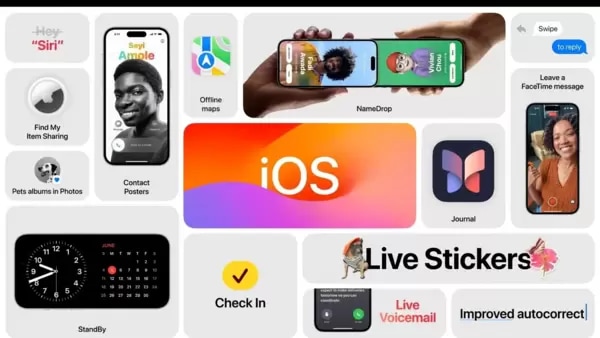

5 things about AI you may have missed today: Meta implements new policies to combat deepfakes, China may misuse AI, more

Meta implements new policies to combat deepfakes; China may misuse AI in global elections, warns Microsoft; AI-powered diabetes program revolutionises health management; study reveals racial bias in AI chatbots; and more in our daily roundup. Let us take a look.

1. Meta implements new policies to combat deepfakes

Meta, the parent company of Facebook, is implementing new policies on deepfakes and other altered media. It will introduce "Made with AI" labels for AI-generated content, expanding them to cover videos, images, and audio. In addition, more prominent labels will identify manipulated content that poses a high risk of deceiving viewers. According to a report by Reuters, Meta is shifting from removing such content to being transparent about it, with the aim of informing viewers about how the content was created.

2. China may misuse AI in global elections, warns Microsoft

China may use AI-generated content on social media to influence elections in countries such as India and the US, Microsoft asserts. Although the immediate impact of such content is low, China's growing use of AI to manipulate content poses long-term risks. North Korea is also implicated in using AI to enhance its operations and carry out cybercrimes, according to the latest report from the Microsoft Threat Analysis Center, PTI reported.

3. AI-powered diabetes program revolutionises health management

A groundbreaking AI-driven diabetes program, endorsed by experts, offers personalised advice to help people manage chronic metabolic diseases, particularly during religious fasts. TWIN Health's Whole Body Digital Twin technology creates personalised nutrition plans that help control blood sugar. Experts hail it as revolutionary, saying it could transform diabetes management, especially during fasting periods such as Ramadan, by providing data-driven insights for informed health decisions, PTI reported.

4. Study reveals racial bias in AI chatbots

AI chatbots exhibit racial biases, favouring white-sounding names over Black-sounding ones, warns a Stanford Law School study. For instance, a job candidate named Tamika may receive a lower salary recommendation than a candidate named Todd. The study underscores the risks of biased AI, particularly in hiring, as companies integrate AI into their operations, potentially perpetuating stereotypes and inequalities, USA Today reported.

5. Mumbai professor falls victim to AI-driven police impersonation scam

A Mumbai professor lost ₹1 lakh to a fraudster posing as a police officer, who used AI to gather her personal details from social media. The scammer claimed her son had been detained and threatened arrest unless money was transferred. Cyber experts warn of a rise in AI-based frauds that prey on victims' emotions. Police are investigating the case, which involves this new modus operandi, Times of India reported.

Read Also: oneplus 12r: oneplus' most powerful ?r' model with aqua touch